Prediction Models as a Service

Prediction models are mathematical or computational systems that predict future results by studying trends, relationships, and patterns in datasets. Many industries, including finance, healthcare, advertising, and meteorology, depend heavily on these models. Prediction models are usually categorized by their learning method and application. Linear regression forecasts a continuous result from independent variables. Logistic regression is applied to binary classification problems. Time series models (e.g., ARIMA, Exponential Smoothing) forecast future values from past trends.

Kinds of Forecasting Models

- Machine Learning Models – These models use algorithms to learn patterns from data and make forecasts. Examples include:

- Decision Trees and Random Forests – applied to classification and regression tasks.

- Support Vector Machines (SVMs) – well suited to high-dimensional classification.

- Neural Networks – used in deep learning applications such as image recognition and speech processing.

- Deep Learning Models – These models use several layers of artificial neural networks to analyze complex data. Examples include:

- Convolutional Neural Networks (CNNs) – used for image processing.

- Recurrent Neural Networks (RNNs) – ideal for sequential data, including natural language processing (NLP).

- Hybrid Models – These models combine techniques such as statistical models and machine learning to improve forecast accuracy.

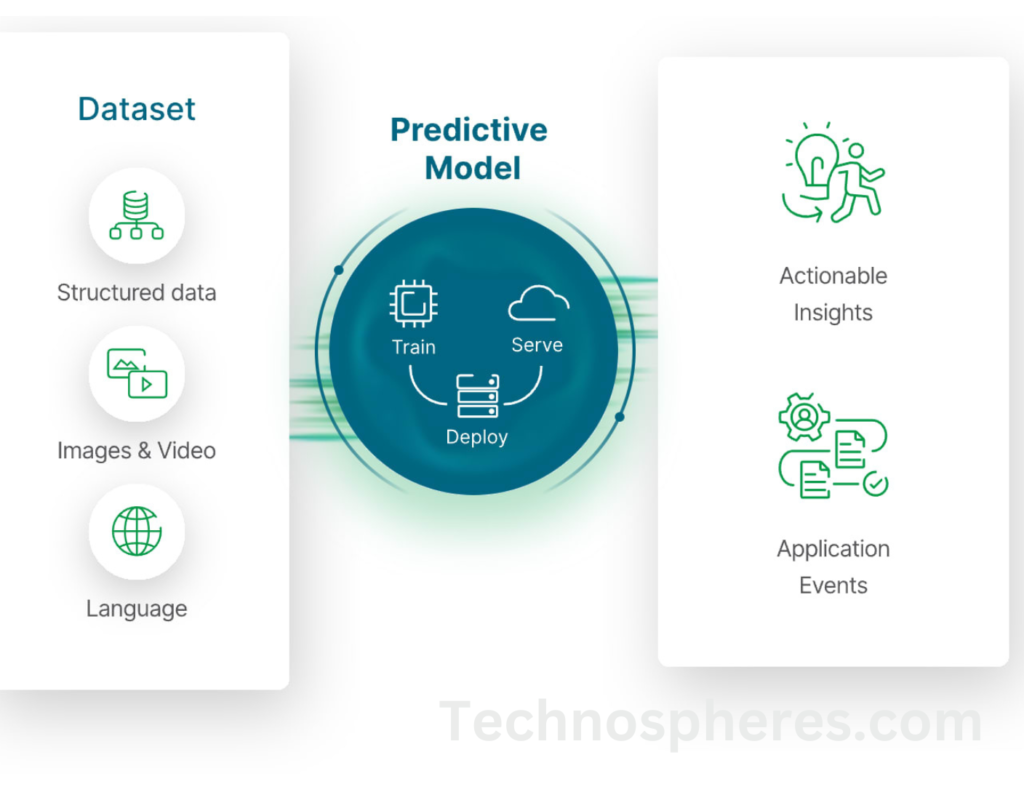

Key Components of Prediction Models

- Data Preprocessing – Cleaning and organizing data to remove noise and inconsistencies.

- Feature Engineering – Selecting and transforming relevant variables to enhance model performance.

- Model Training – Using historical data to teach the model how to make predictions.

- Validation and Testing – Evaluating model performance using separate datasets.

- Deployment – Integrating the model into a system or application for real-world use.

Evolution of Prediction Models as a Service (PMaaS)

Prediction Models as a Service (PMaaS) is a cloud-based offering that allows businesses and individuals to access pre-built or customizable prediction models without requiring in-depth expertise in data science or machine learning.

Evolution of PMaaS

- Early Stages: On-Premise Prediction Models

- Initially, organizations built and maintained prediction models on their own infrastructure.

- Required significant computational power and expertise.

- Limited scalability and high costs.

- Rise of Cloud Computing and AI Services

- AI and machine learning features were made available on cloud platforms like Amazon Web Services, Google Cloud, and Microsoft Azure.

- Allowed organizations to develop, train, and deploy models in the cloud.

- Reduced infrastructure costs and improved accessibility.

- Development of PMaaS

- Service providers started offering pre-built models that could be used via APIs.

- Automated tools for model selection, training, and deployment became available.

- Enabled businesses to integrate prediction capabilities into applications without deep technical knowledge.

Current Trends in PMaaS

- Automated Machine Learning (AutoML): Platforms such as Google AutoML and H2O.ai let users create prediction models without manual feature engineering.

- Explainable AI (XAI): Growing demand for transparency in AI models.

- Edge AI: Running prediction models on edge devices, closer to end users.

Advantages of PMaaS

- Cost-Effective: No need for expensive infrastructure or data science teams.

- Scalability: Can handle large datasets and scale resources as needed.

- Ease of Integration: API-based services simplify adoption in business workflows.

- Continuous Improvement: Cloud providers update and optimize models over time.

Importance and Use Cases of Prediction Models

Importance of Prediction Models

- Data-Driven Decision Making: Helps companies make informed decisions based on data insights.

- Risk Management: Identifies potential financial, medical, and cybersecurity risks.

- Automation: Reduces human effort in repetitive tasks by forecasting results automatically.

- Personalization: Increases customer satisfaction by suggesting tailored products and solutions.

Use Cases of Prediction Models

Healthcare

- Disease prediction (e.g., cancer detection using AI).

- Patient readmission forecasting.

- Drug discovery and personalized medicine.

Finance and Banking

- Credit risk assessment.

- Stock market predictions.

- Fraud detection and prevention.

Retail and E-commerce

- Demand forecasting.

- Personalized recommendations.

- Inventory management.

Marketing and Customer Analytics

- Customer churn prediction.

- Sentiment analysis for brand perception.

- Supply chain optimization.

- Quality control using anomaly detection models.

Weather Forecasting and Disaster Management

- Climate change modeling.

- Natural disaster prediction and preparedness.

- Real-time weather monitoring for agriculture.

Cybersecurity

- Threat detection and anomaly identification.

- Fraudulent transaction monitoring.

- Identity verification using biometric predictions.

Basics of Predictive Models

Prediction models are mathematical structures or algorithms that forecast future results from past data. These models find trends in datasets and apply them to make informed forecasts about unknown values. Many industries, including finance, healthcare, marketing, and engineering, use prediction models to support decision making.

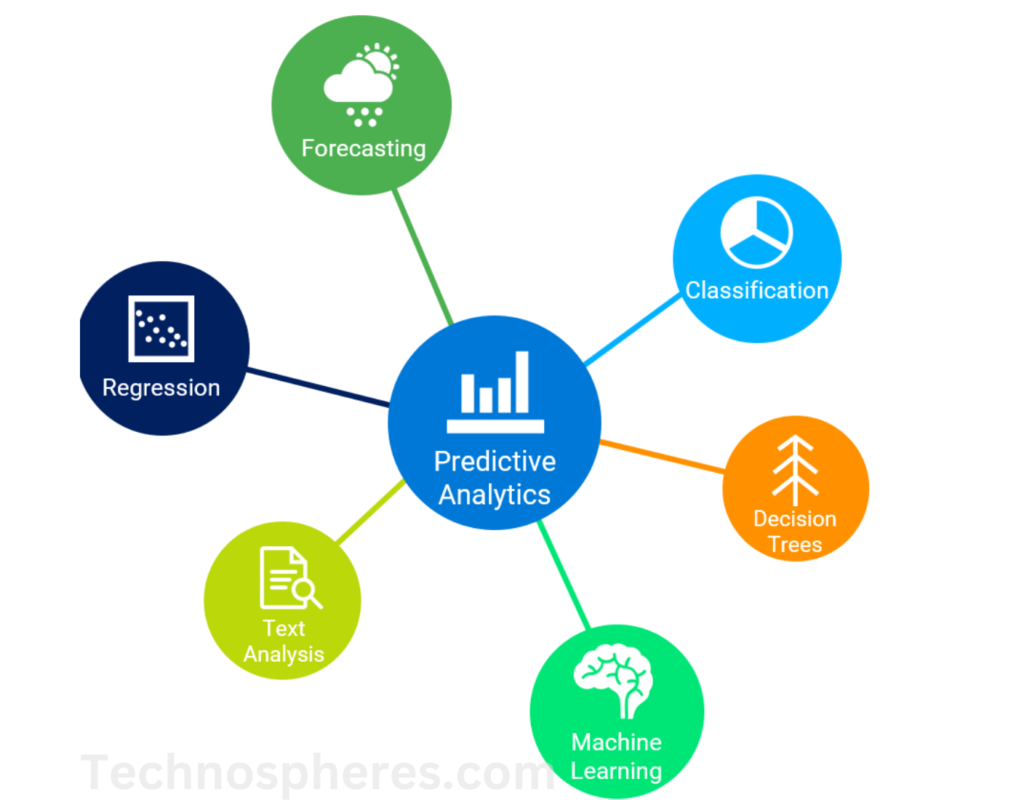

Kinds of Predictive Models

Based on their design, learning style, and purpose, prediction models can be grouped into several categories. The most common varieties are:

- Linear regression is a straightforward approach that fits the data with a straight line following the equation $Y = aX + b$, where $X$ is the independent variable, $Y$ is the dependent variable, and $a$ and $b$ are the coefficients (the slope and the intercept).

- Polynomial regression extends linear regression by fitting a polynomial curve to capture more complex relationships.
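The straight-line fit described above can be sketched with ordinary least squares. This is a minimal illustration using only the Python standard library; the function names `fit_line` and `predict` and the sample data are assumptions made for this example, not part of any particular PMaaS product.

```python
from statistics import mean


def fit_line(xs, ys):
    """Return (a, b) minimizing the squared error of y = a * x + b."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must contain at least two distinct values")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    a = sxy / sxx            # slope
    b = y_bar - a * x_bar    # intercept
    return a, b


def predict(a, b, x):
    """Evaluate the fitted line at x."""
    return a * x + b


# Hypothetical data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
a, b = fit_line(xs, ys)
```

With real, noisy data the fitted coefficients will only approximate the underlying relationship, and a library such as scikit-learn would normally be used instead of hand-written formulas.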

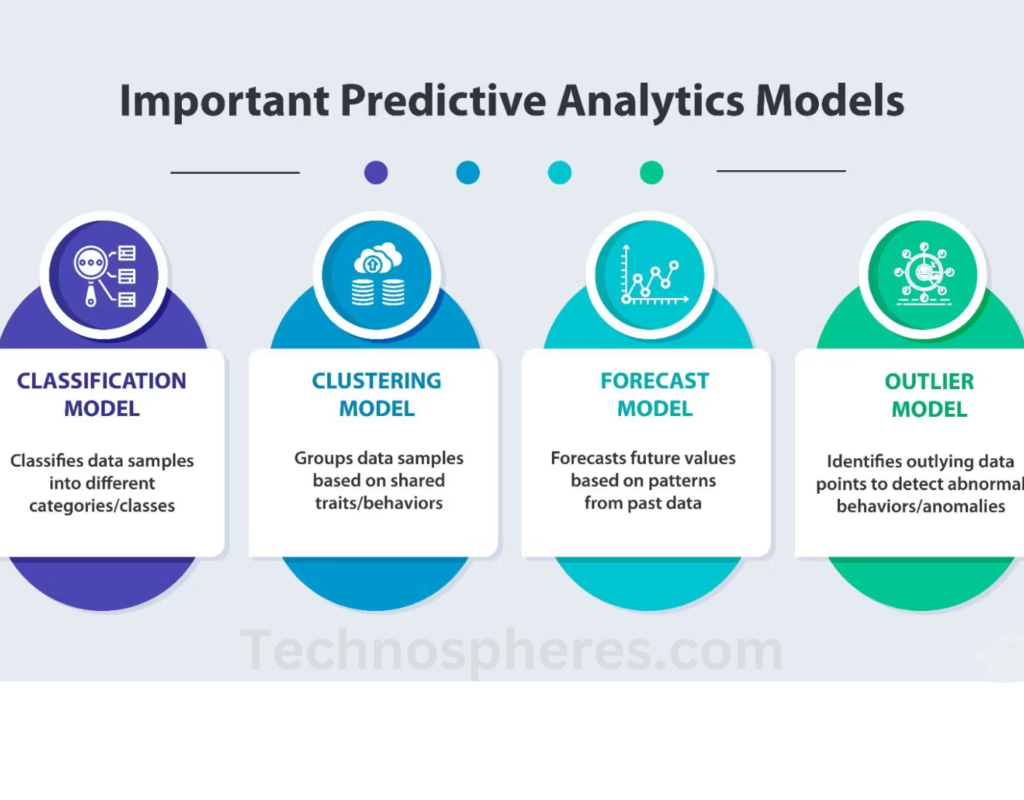

Classification Models

Classification models forecast categorical results. These models are applied when the target variable consists of labeled or discrete values. Examples include:

- Decision Trees: a tree-like structure in which each node represents a decision, leading to a final classification.

- Random Forest: an ensemble of decision trees that improves accuracy and helps reduce overfitting.

- Support Vector Machines (SVM): find the optimal boundary (hyperplane) for separating classes in the data.

Time Series Models

Time series models forecast future values from historical, time-dependent data. Typical applications include stock market analysis, demand forecasting, and weather prediction. Examples include:

- ARIMA (AutoRegressive Integrated Moving Average) uses autoregression, differencing, and moving averages to model time-dependent data.

- Exponential Smoothing assigns progressively lower weights to older observations to smooth out fluctuations in the data.
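The weighting idea behind exponential smoothing can be illustrated with the simplest variant, often called simple exponential smoothing. The sketch below assumes a smoothing factor `alpha` between 0 and 1 and uses the first observation as the starting level; both choices are assumptions of this example.

```python
def exponential_smoothing(series, alpha):
    """Return the smoothed series s, where s[t] = alpha * x[t] + (1 - alpha) * s[t - 1]."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the interval (0, 1]")
    if not series:
        return []
    smoothed = [series[0]]  # start from the first observation
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical demand figures.
demand = [10, 20, 30]
levels = exponential_smoothing(demand, alpha=0.5)
next_forecast = levels[-1]  # one-step-ahead forecast is the last smoothed level
```

A larger `alpha` reacts faster to recent changes, while a smaller one produces a smoother series.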

Deep Learning Models

These models make forecasts using artificial neural networks and are particularly useful for complex data such as images, text, and speech. Examples include:

- Convolutional Neural Networks (CNNs) are used for image classification and object detection.

- Recurrent Neural Networks (RNNs) are used for sequential data such as speech and text.

Supervised vs. Unsupervised Learning

Based on how they learn from data, machine learning models are generally split into supervised and unsupervised learning.

Supervised Learning

In supervised learning, the model is trained on labeled data, meaning each input has a known output label. The goal is to learn the mapping function from input to output.

Characteristics:

- Requires labeled datasets.

- Used for classification and regression tasks.

- Learns from historical data and applies it to new data.

Examples of Supervised Learning Algorithms:

- Linear and Logistic Regression (for predicting numerical and categorical values, respectively).

- Decision Trees & Random Forests (for classification and regression problems).

- Neural Networks (for complex pattern recognition).

Use Cases:

- Email spam detection (spam or not spam).

- Fraud detection in banking.

- Medical diagnosis (identifying diseases from symptoms).

Unsupervised Learning

Unsupervised learning deals with unlabeled data, meaning the algorithm must find patterns, structures, or clusters within the data without predefined labels.

Characteristics:

- No labeled outputs.

- Finds hidden structures in data.

- Used for clustering, association, and dimensionality reduction.

Examples of Unsupervised Learning Algorithms:

- K-Means Clustering (for grouping similar data points into clusters).

- Hierarchical Clustering (builds a tree of nested clusters).

- Principal Component Analysis (PCA) (reduces data dimensionality while retaining important features).

Use Cases:

- Customer segmentation in marketing.

- Anomaly detection in cybersecurity.

- Recommender systems (e.g., Netflix suggesting similar movies).

Common Algorithms Used in Prediction Models

Predictive modeling typically draws on several algorithms, each with different strengths and applications. Among the most common ones are:

Linear Regression

- Appropriate for forecasting continuous numeric values.

- Assumes a linear relationship between input and output.

Logistic Regression

- Used for binary classification problems.

- Uses the logistic (sigmoid) function to estimate probabilities.
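To make the role of the sigmoid concrete: logistic regression computes a weighted sum $z$ of the inputs and passes it through $\sigma(z) = 1 / (1 + e^{-z})$ to obtain a probability between 0 and 1. The sketch below assumes the weights and bias have already been learned; the values used are made up for illustration.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights, bias, features):
    """Probability of the positive class for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def predict_label(weights, bias, features, threshold=0.5):
    """Turn the probability into a 0/1 decision."""
    return 1 if predict_proba(weights, bias, features) >= threshold else 0


# Hypothetical, already-trained parameters.
weights, bias = [1.0, -1.0], 0.0
probability = predict_proba(weights, bias, [2.0, 1.0])  # z = 1.0
```

The 0.5 threshold is a common default; applications such as fraud detection often choose a different threshold to trade false positives against false negatives.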

Decision Trees

- Use a tree-like structure in which every internal node represents a decision and every leaf node an outcome.

- Easy to understand and interpret.

Support Vector Machines (SVM)

- Identify the optimal hyperplane separating the classes in a dataset.

- Effective in high-dimensional spaces.

Naïve Bayes

- A probabilistic classifier based on Bayes’ theorem.

- Works well for text classification (e.g., spam filtering).

K-Means Clustering

- Groups data points into K clusters based on similarity.

- Used in customer segmentation and market analysis.
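The grouping step can be sketched with Lloyd's algorithm, the usual iterative procedure for K-Means: assign each point to its nearest centroid, recompute each centroid as the mean of its points, and repeat until nothing changes. This example assumes the caller supplies the initial centroids so the result is deterministic; the customer data is hypothetical.

```python
import math


def k_means(points, initial_centroids, max_iters=100):
    """Cluster points (tuples of numbers) around len(initial_centroids) centroids."""
    centroids = [tuple(c) for c in initial_centroids]
    clusters = [[] for _ in centroids]
    for _ in range(max_iters):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for old, members in zip(centroids, clusters):
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers described by (visits per month, average basket size).
customers = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, clusters = k_means(customers, initial_centroids=[(0, 0), (10, 10)])
```

In practice the initial centroids are usually chosen randomly or with a scheme such as k-means++, and the result can depend on that choice.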

Neural Networks & Deep Learning

- Use multiple layers of artificial neurons to learn complex patterns.

- Well suited to tasks such as speech processing, image recognition, and autonomous driving.

Explanation of Prediction Models as a Service

Prediction Models as a Service (PMaaS) is a cloud-based service that lets businesses and developers access and use prediction models without having to build, train, or maintain them. It offers an on-demand framework in which users can use pretrained models or run their own models without worrying about the underlying infrastructure, compute, or scalability.

Created to democratize artificial intelligence (AI) and machine learning (ML), PMaaS makes forecasting capabilities available to businesses that lack the resources or expertise to build their own ML models. These solutions are easily integrated into applications through APIs and are frequently used for tasks such as demand forecasting, fraud detection, and recommendation systems.

Key Features and Capabilities

PMaaS solutions offer several features that improve user experience, performance, and scalability:

- Pretrained Models: Some PMaaS providers offer a variety of pretrained models that can be used right away for standard tasks such as image recognition, natural language processing, and predictive analytics.

- Custom Model Deployment: Users can upload and publish their own machine learning models without worrying about infrastructure management.

- Performance Optimization and Scalability: Cloud-based PMaaS offerings adjust dynamically to changing demand, helping maintain steady performance for live predictions.

- API Integration: Most PMaaS offerings expose REST or GraphQL APIs, which makes it simple to add prediction capabilities to enterprise software, mobile applications, and web applications.

- Continuous Learning: Some systems maintain accuracy and relevance over time by retraining models on fresh data.

- Security and Compliance: PMaaS services typically provide data security, compliance with industry standards (GDPR, HIPAA, among others), and access controls for safe model usage.

- Pay-as-You-Go Pricing: Rather than investing in costly equipment and data science teams, companies can pay only for the predictions they need, lowering operating costs.

- Multi-Cloud and Hybrid Deployment: For flexibility and regulatory requirements, some PMaaS solutions support deployment across multiple cloud vendors or on-premise infrastructure.

How PMaaS Differs from Traditional Machine Learning Deployment

PMaaS differs from traditional ML deployment in several key ways:

| Feature | PMaaS | Traditional ML Deployment |
| --- | --- | --- |
| Infrastructure Management | Fully managed by the provider | Requires setting up servers, GPUs, and storage |
| Model Training | Often includes pre-trained models and automated training | Requires manual training and tuning |
| Deployment Time | Rapid deployment via APIs | Time-consuming setup and maintenance |
| Scalability | Automatically scales as needed | Requires manual scaling and resource allocation |
| Cost Structure | Pay-as-you-go, lower upfront cost | High initial investment in hardware and expertise |
| Maintenance | Handled by the provider, including updates and monitoring | Requires ongoing maintenance and debugging |
| Security and Compliance | Managed by the cloud provider with compliance guarantees | Requires in-house security management |

Model Serving Infrastructure and APIs

A trained predictive model has to be deployed so that applications can use it. This is handled by model serving infrastructure and APIs.

Model Serving APIs

Application programming interfaces (APIs) let external applications submit data to a model and receive predictions. The main options are:

- RESTful APIs: The most frequently used option; they let programs communicate with models over HTTP.

- GraphQL APIs: Used when flexible queries are needed; they let clients define exactly the information they need.

- gRPC: A high-performance RPC framework that enables fast, real-time model serving through efficient communication.
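As a rough illustration of the request and response cycle behind a REST-style prediction endpoint, the sketch below shows a handler that accepts a JSON body and returns a status code with a JSON reply. Everything here is hypothetical: the `features` and `prediction` field names, the `toy_model` stand-in, and the idea of a `POST /predict` route are assumptions for this example, not the API of any specific provider.

```python
import json


def toy_model(features):
    """Stand-in for a trained model: returns the mean of the features."""
    return sum(features) / len(features)


def handle_predict(request_body):
    """Emulate a POST /predict handler: JSON bytes in, (status, JSON text) out."""
    try:
        payload = json.loads(request_body)
        features = payload["features"]
        is_numeric_list = (
            isinstance(features, list)
            and len(features) > 0
            and all(isinstance(v, (int, float)) and not isinstance(v, bool)
                    for v in features)
        )
        if not is_numeric_list:
            raise ValueError("'features' must be a non-empty list of numbers")
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed JSON, a missing field, or bad types map to a client error.
        return 400, json.dumps({"error": str(exc)})
    return 200, json.dumps({"prediction": toy_model(features)})


status, body = handle_predict(b'{"features": [1, 2, 3]}')
```

In a real deployment this function would sit behind an HTTP framework or a managed endpoint, which would also take care of authentication, logging, and timeouts.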

Model Serving Infrastructure

The infrastructure for serving models needs to deliver speed, efficiency, and reliability. The main components are:

- Model Servers: Frameworks such as TensorFlow Serving, TorchServe, and NVIDIA Triton run models efficiently.

- Containerization: Models are usually packaged in Docker containers, which makes them portable and scalable.

- Orchestration: Kubernetes orchestrates model deployments and enables dynamic scaling.

- Load Balancing: Load balancers distribute prediction requests across many servers to handle heavy load.

- Edge Deployment: For situations demanding low-latency predictions (for example, Internet of Things), models can be deployed close to users at the edge.
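The load-balancing idea can be illustrated with the simplest policy, round robin, where requests are handed to model replicas in turn. This is a toy sketch: the replica names and `toy_predict` function are hypothetical, and production load balancers also handle health checks, retries, and weighted routing.

```python
import itertools


class RoundRobinBalancer:
    """Send each request to the next replica in a fixed rotation."""

    def __init__(self, replicas):
        replicas = list(replicas)
        if not replicas:
            raise ValueError("at least one replica is required")
        self._cycle = itertools.cycle(replicas)

    def route(self, features):
        """Return (replica name, prediction) for one request."""
        name, predict = next(self._cycle)
        return name, predict(features)


def toy_predict(features):
    """Stand-in for a model replica."""
    return sum(features)


balancer = RoundRobinBalancer([
    ("replica-a", toy_predict),
    ("replica-b", toy_predict),
])
served_by = [balancer.route([1, 2])[0] for _ in range(4)]
```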

Data Processing Pipelines

PMaaS relies on data processing to clean, convert, and format raw data so that it is ready for model use. A well-structured data processing pipeline includes:

Data Ingestion

- Gathering data from databases, IoT devices, logs, and external APIs.

- Batch ingestion (e.g., Apache Spark) or real-time streaming (e.g., Apache Kafka).

Data Cleansing and Preprocessing

- Handling duplicates, missing data, and outliers.

- Normalizing and standardizing data to ensure uniformity.

- Encoding categorical features so that ML models can use them.
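The cleansing steps listed above can be sketched in a few small functions: removing duplicate rows, filling missing values, scaling numbers to a common range, and one-hot encoding categories. The specific choices here (mean imputation, min-max scaling) and the sample records are assumptions for illustration; other strategies are equally common.

```python
from statistics import mean


def drop_duplicates(rows):
    """Remove repeated rows while keeping the original order."""
    seen, unique = set(), []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


def fill_missing(values):
    """Replace None with the mean of the values that are present."""
    fill = mean(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Encode each category as a 0/1 vector, one position per category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


# Hypothetical (age, plan) records with a duplicate and a missing age.
raw_rows = [(20, "basic"), (20, "basic"), (None, "pro"), (40, "pro")]
rows = drop_duplicates(raw_rows)
ages = min_max_scale(fill_missing([age for age, _ in rows]))
plans = one_hot([plan for _, plan in rows])
```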

Feature Engineering

- Creating new features that improve model performance.

- Applying dimensionality reduction methods such as PCA to high-dimensional data.

- Aggregating historical data to create time series features.

Data Storage and Management

- Using data lakes (e.g., AWS S3, Azure Data Lake) to store unprocessed data.

- Using data warehouses such as Snowflake or Google BigQuery for structured analysis.

Model Training and Validation

- Splitting data into training, validation, and test sets.

- Tuning hyperparameters to maximize model performance.
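The split into training, validation, and test sets can be sketched as a shuffled partition. The fractions and the fixed seed below are assumptions of this example; for time series data a chronological split is usually preferred over shuffling.

```python
import random


def train_val_test_split(rows, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle rows reproducibly and cut them into three disjoint sets."""
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # local RNG, global state untouched
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(10))
```

The validation set is used while tuning hyperparameters, and the test set is held back for a final, unbiased performance estimate.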

Deployment and Monitoring

- Employing technologies like Apache Airflow to automate pipeline execution.

- Checking for data drift regularly to maintain model accuracy over time.

Security and Compliance Considerations

PMaaS solutions have to be designed with security and regulation in mind to protect sensitive information and ensure legal compliance.

Security Considerations

- Data Encryption: Encrypt data at rest and in transit using standards such as AES-256 and TLS.

- Access Control: Use role-based access control (RBAC) and multifactor authentication (MFA).

- API Security: Prevent abuse by means of authentication (OAuth 2.0, JWT tokens) and rate limiting.

- Secure Model Deployment: Follow container security best practices and run vulnerability scans.

- Monitoring and Logging: Use security information and event management (SIEM) tools to detect threats.
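Rate limiting, mentioned above under API security, is often implemented with a token bucket: each client gets a bucket that refills at a steady rate, and a request is served only if a token is available. The sketch below is one possible implementation; the class name, parameters, and the injectable `clock` argument (used here to make the behaviour easy to test) are assumptions of this example.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        """Return True and consume a token if the request may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add the tokens earned since the last call, capped at capacity.
        self._tokens = min(self.capacity,
                           self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


# A fake clock makes the example deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, refill_per_second=1.0, clock=lambda: fake_now[0])
burst = [bucket.allow(), bucket.allow(), bucket.allow()]
fake_now[0] = 1.0  # one second later, one token has been refilled
after_wait = bucket.allow()
```

A prediction API would typically keep one bucket per API key and reject over-limit requests with an HTTP 429 response.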

Compliance Considerations

Organizations have to follow industry regulations, including:

- GDPR (General Data Protection Regulation): protects the personal data of individuals in the European Union.

- CCPA (California Consumer Privacy Act): establishes data privacy rights for California residents.

- SOC 2: defines security and privacy criteria for service organizations.

Furthermore, data residency regulations may require data to be stored and processed within defined geographical boundaries.

Popular PMaaS Platforms and Providers

Google Cloud AI Platform

Google Cloud AI Platform is a fully managed service that lets data scientists and developers build, train, and run machine learning (ML) models at scale. It includes AutoML for automated model creation and integrates with TensorFlow, scikit-learn, and other ML frameworks. Key features include data labeling, hyperparameter tuning, and integration with Google Cloud Storage and BigQuery for data processing.

Amazon SageMaker

Amazon SageMaker is a cloud-based machine learning service that speeds up the creation, training, and deployment of ML models. It offers Jupyter notebooks for development, built-in algorithms, and features such as SageMaker Autopilot for automated model selection. SageMaker also supports real-time and batch inference, which makes it a complete PMaaS solution for businesses.

Microsoft Azure Machine Learning

Azure Machine Learning (Azure ML) runs on the Azure cloud platform and offers model training, automated machine learning, and deployment features. Azure ML Studio provides a drag-and-drop interface, supports well-known ML frameworks, and ties into Azure’s cloud ecosystem for data storage and processing. Azure ML also provides monitoring and management of models in production through MLOps features.

Open-Source and Custom PMaaS Solutions

- Open-source and custom PMaaS solutions give many companies more flexibility and cost control.

- Platforms such as Kubeflow (built on Kubernetes), MLflow (model tracking and deployment), and H2O.ai provide open-source tooling for building PMaaS-style services.

Implementation and Integration

Setting Up a PMaaS Workflow

To run reliably, Prediction Models as a Service (PMaaS) depends on a clearly defined workflow. This workflow comprises:

- Data Ingestion: Gathering and preprocessing data from many sources.

- Model Selection and Training: Selecting the best prediction model and training it on historical data.

- Deployment: Integrating the trained model into a cloud-based or on-premise service.

- Monitoring and Updating: Checking model performance regularly and updating the model so that it stays aligned with business goals.

Integrating Prediction Models with Business Applications

Integrating predictive models with business applications turns predictions into actionable insights. This integration is usually achieved through:

- APIs and Web Services: Let models and business applications exchange information easily.

- Automated Decision Systems: Embed predictions into process automation applications such as CRM, ERP, or supply chain management software.

- User Dashboards: Present visualized insights from business intelligence tools to decision makers.

Handling Real-time vs. Batch Predictions

Predictive models operate in either real-time or batch processing modes. The table below summarizes the differences:

| Feature | Real-time Predictions | Batch Predictions |
| --- | --- | --- |
| Use Cases | Fraud detection, recommendation systems, chatbots | Demand forecasting, customer churn analysis |
| Data Processing | Processes individual data points instantly | Processes large volumes of data periodically |
| Latency | Low (milliseconds to seconds) | High (minutes to hours) |
| Computational Cost | High, requires continuous processing | Lower, scheduled processing reduces costs |
| Scalability | Requires robust infrastructure for high-speed processing | Easier to scale with cloud-based solutions |

Organizations must balance accuracy, speed, and computational cost when choosing between these approaches.
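The difference between the two modes can be shown with a toy example: a real-time call scores one record as it arrives, while a batch job walks through a stored dataset in chunks. The `fraud_score` function is a hypothetical stand-in for a trained model, and the chunk size is an arbitrary choice for this sketch.

```python
def fraud_score(amount):
    """Hypothetical model: larger transactions get a higher score, capped at 1."""
    return min(amount / 10_000, 1.0)


def predict_realtime(amount):
    """Score a single incoming record immediately."""
    return fraud_score(amount)


def predict_batch(amounts, batch_size):
    """Score a stored dataset chunk by chunk, e.g. in a scheduled job."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    results = []
    for start in range(0, len(amounts), batch_size):
        chunk = amounts[start:start + batch_size]
        results.extend(fraud_score(amount) for amount in chunk)
    return results


single = predict_realtime(5_000)
nightly = predict_batch([1_000, 5_000, 20_000], batch_size=2)
```

Both paths call the same model; what changes is when the work happens and how much data is handled per call.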

Challenges and Limitations of Prediction Models as a Service

Data Privacy and Security Concerns

Given that PMaaS is based on cloud data processing, organizations are confronted with:

- Increased risk of data breaches and unauthorized access.

- Compliance challenges with regulatory requirements (e.g., GDPR, HIPAA).

- Secure data sharing concerns, such as encryption and rigorous access control.

Cost and Scalability Issues

- Computational Costs: Operating large-scale models is expensive, which drives up cloud service costs.

- Scalability: As data volumes grow, models must efficiently scale to maintain performance. Organizations must optimize their architectures to manage these challenges cost-effectively.

Case Studies and Industry Applications

Healthcare Predictions (e.g., Disease Diagnosis)

PMaaS enables early disease detection and diagnosis through predictive analytics. For example:

- AI-based diagnostic tools analyze medical images for early cancer detection.

- Predictive modeling assesses patient risk for chronic diseases like diabetes.

- Hospital resource optimization forecasts patient admission rates.

Financial Risk and Fraud Detection

Prediction Models as a Service is utilized by financial institutions for:

- Credit scoring: Forecasting a customer’s default likelihood.

- Fraud detection: Real-time detection of suspicious transactions through anomaly detection models.

- Algorithmic trading: Investment decisions based on predictive analysis.

Retail and Customer Behavior Analysis

Retailers utilize predictive models to:

- Personalize marketing efforts through customer segmentation.

- Manage inventory through demand forecasting.

- Lower churn rates through customer attrition prediction.

Future Directions for Prediction Models as a Service

Developments in AutoML and Low-Code Platforms

A growing number of prediction models are built with low-code/no-code tools or Automated Machine Learning (AutoML) solutions, which are reshaping how models are developed. Feature engineering, model selection, and hyperparameter tuning are critical steps in building a machine learning model, and AutoML solutions automate all three. Low-code solutions let organizations without deep AI expertise develop and deploy predictive models through simple drag-and-drop interfaces. These advances make Prediction Models as a Service more accessible, enabling faster deployment and less reliance on data science skills.

On-device Predictions and Edge AI

According to NICE, Edge AI means running predictive models on edge devices, such as smartphones, IoT sensors, and embedded systems, instead of depending on the cloud. This approach reduces latency, improves real-time decision making, and enhances privacy because data stays on the device. On-device prediction is especially relevant to areas such as autonomous vehicles, medical monitoring, and industrial automation, where real-time intelligence plays a key role. As hardware improves, Prediction Models as a Service will become more tightly integrated with edge AI solutions, delivering faster and more efficient predictive analytics.

Ethical AI and Explainability in Prediction Models as a Service

As reliance on AI predictions has grown, so have concerns about bias, fairness, and transparency. Ethical AI means building prediction models that are fair, accountable, and as free from bias as possible. The ability to understand and explain AI decisions is important both for regulatory compliance and for user trust. Future Prediction Models as a Service offerings are likely to build in Explainable AI (XAI) methods, such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), to help users understand model decisions. This will help organizations meet ethical standards and earn users’ trust.
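SHAP builds on Shapley values from cooperative game theory, which split a prediction's difference from a baseline among the input features. The sketch below computes exact Shapley values by brute force over all feature orderings, which is only feasible for a handful of features; it does not use the SHAP library, and the toy model, instance, and baseline are assumptions for illustration.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)       # start with every feature at its baseline
        previous_output = model(current)
        for i in order:
            current[i] = instance[i]   # switch feature i to its actual value
            output = model(current)
            totals[i] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in totals]


def toy_model(x):
    """Hypothetical linear model: 2 * x0 + 3 * x1 + 1."""
    return 2 * x[0] + 3 * x[1] + 1


instance, baseline = [1.0, 2.0], [0.0, 0.0]
contributions = shapley_values(toy_model, instance, baseline)
```

The contributions always add up to the difference between the prediction for the instance and the prediction for the baseline, which is what makes them useful as an explanation. Practical tools rely on approximations or model-specific shortcuts because enumerating every ordering grows factorially with the number of features.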

The Role of Quantum Computing in Prediction Models

Quantum computing could transform prediction models by performing certain computations far faster than conventional computers. Quantum machine learning (QML) algorithms may improve optimization, pattern detection, and probabilistic modeling, making advanced predictions more efficient and accurate. Although quantum computing is still at an early stage, future Prediction Models as a Service platforms may use quantum algorithms to process high-dimensional data and perform computationally intensive calculations that are impractical for conventional systems.

Conclusion

- AutoML and low-code platforms are democratizing AI adoption by simplifying model creation and deployment.

- Edge AI and on-device predictions improve real-time decision making, enhance privacy, and decrease latency.

- Ethical AI and explainability are becoming necessary for regulatory compliance and user trust in AI-driven decisions.

- Quantum computing could significantly enhance the efficiency and accuracy of prediction models.

Final Thoughts on Prediction Models as a Service Adoption

Prediction Models as a Service (PMaaS) is evolving with innovations in automation, edge computing, ethics, and quantum technology. As AI-powered insights become more mainstream among companies, Prediction Models as a Service vendors must focus on accessibility, transparency, and efficiency. Companies that embrace these emerging trends will gain a competitive advantage in making informed decisions and innovating through predictive analytics.
